#How to install a PySpark server#

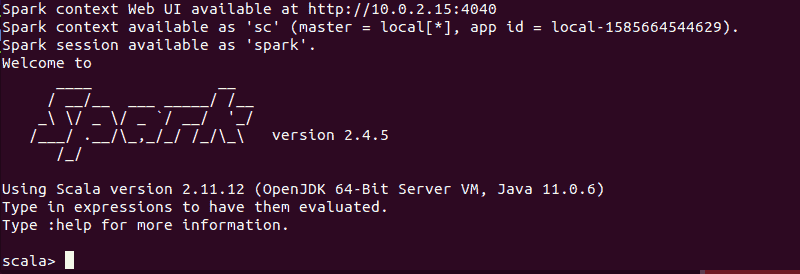

Apache Spark is a unified, open-source analytics engine for large-scale data processing in a distributed environment. It supports a wide array of programming languages, such as Java, Python, and R, even though it is written in Scala. PySpark is simply the Python API for Spark: it lets you use an approachable language like Python while leveraging the power of Apache Spark. Spark is designed to run on a distributed computing system.

However, one might not always have access to a distributed system.

Especially for learning purposes, one might want to run Spark on one's own computer. On a single physical machine, we run Spark in local mode with its built-in scheduler and no external cluster manager. On a real cluster, Spark is deployed through a cluster manager such as Spark's own Standalone Cluster Manager, Hadoop YARN, Apache Mesos, or Kubernetes. In local mode, all the Spark processes effectively run within the same JVM (Java Virtual Machine). The entire processing is therefore done on a single multithreaded server (technically, your local machine is a server). Local mode is widely used for prototyping, development, debugging, and testing.

However, you can still benefit from parallelisation across all the cores in your server, though not across several servers. For example, when you define `.master("local[4]")` in your SparkSession, you run it locally with 4 cores.

For this tutorial, I am using PySpark on an Ubuntu virtual machine installed on my macOS machine and running it in JupyterLab. To use JupyterLab or Jupyter Notebook, you need the findspark library. Alternatively, you can use the interactive PySpark shell (`pyspark`), where a SparkSession named `spark` has already been created for you.

You can see my version of Linux:

```
$ cat /etc/os-release
NAME="Ubuntu"
VERSION="18.04.4 LTS (Bionic Beaver)"
ID=ubuntu
PRETTY_NAME="Ubuntu 18.04.4 LTS"
VERSION_ID="18.04"
HOME_URL=""
SUPPORT_URL=""
BUG_REPORT_URL=""
PRIVACY_POLICY_URL=""
VERSION_CODENAME=bionic
```

Installing Apache Spark on any operating system can be a bit cumbersome, but there are a lot of tutorials online. If you follow the steps, you will be just fine.
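In a notebook, findspark is typically called once, before PySpark is imported. Here is a minimal sketch. It assumes Spark was installed from a downloaded distribution and that `SPARK_HOME` points at it. The wrapper `ensure_pyspark_importable` is hypothetical; `findspark.init()` is the library's own entry point.

```python
import importlib
import importlib.util


def ensure_pyspark_importable() -> bool:
    """Try to make `import pyspark` work inside a Jupyter kernel.

    Returns True if PySpark can be imported afterwards, False otherwise.
    """
    if importlib.util.find_spec("pyspark") is not None:
        return True  # already importable, e.g. installed with pip
    if importlib.util.find_spec("findspark") is None:
        return False  # findspark is not installed in this kernel either
    import findspark

    try:
        # Locates the Spark installation (SPARK_HOME is the usual hint)
        # and adds its Python bindings to sys.path.
        findspark.init()
    except ValueError:
        return False  # findspark could not locate a Spark installation
    importlib.invalidate_caches()  # make sure new sys.path entries are seen
    return importlib.util.find_spec("pyspark") is not None
```

Once it returns True, `from pyspark.sql import SparkSession` works as usual. If PySpark was installed with pip, the first check already succeeds and findspark is never used.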
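The release information printed by `cat /etc/os-release` can also be read from Python. This small sketch assumes the usual os-release format: one `KEY=value` pair per line, with shell-style quoting. `parse_os_release` is a hypothetical helper.

```python
import shlex
from typing import Dict


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse the KEY=value lines of an os-release file into a dictionary."""
    info: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments and anything malformed
        key, _, raw_value = line.partition("=")
        # Values follow shell quoting rules, so shlex removes the quotes.
        tokens = shlex.split(raw_value)
        info[key.strip()] = tokens[0] if tokens else ""
    return info
```

On Python 3.10 and later, the standard library's `platform.freedesktop_os_release()` returns a similar dictionary read directly from the file.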
